Google’s documentation updates often appear subtle, yet they can signal important shifts in how search engines interact with websites. The recent clarification and update regarding Googlebot’s file size limit is one such change that has caught the attention of SEO professionals, developers, and digital publishers alike. While the concept of crawl limits is not new, clearer documentation about how much of a file Googlebot processes can significantly influence technical SEO strategies and content publishing practices.

TL;DR: Google has updated its documentation to clarify how much of a webpage file Googlebot will crawl and process. This change emphasizes the importance of keeping critical content within the crawlable size limit to ensure proper indexing. Oversized HTML files may result in partially indexed content. Site owners should optimize structure, reduce unnecessary code, and prioritize essential content placement to adapt effectively.

The update sheds light on longstanding behaviors of Googlebot while reinforcing best practices in page optimization. Understanding what this means in practical terms can help websites maintain visibility, avoid indexing issues, and improve search performance.

Understanding Googlebot’s File Size Limit

Googlebot does not process an unlimited amount of data per page. According to the updated documentation, there is a defined file size threshold for HTML and other supported text-based files. Once Googlebot reaches this limit, it stops fetching the file, and only the content before the cutoff is considered for indexing. This does not mean the page will go unindexed; rather, content beyond the limit may not be seen or evaluated.

Historically, many SEO professionals assumed this limitation existed, but the recent documentation update makes the guidance more explicit. That clarity reduces ambiguity and reinforces the importance of efficient page construction.

From a technical standpoint, this limit applies primarily to:

- HTML documents

- Rendered HTML after JavaScript execution

- Other supported text-based resources

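Large, bloated pages filled with excessive inline scripts, inline CSS, or unnecessary markup are more likely to exceed the practical crawlable limit. External CSS and JavaScript files referenced by the page are fetched separately, and each of those fetches is subject to its own limit rather than counting against the HTML document.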
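A quick way to see whether inline code is eating into a page's byte budget is to measure it. The Python sketch below is a rough, regex-based estimate rather than a real HTML parser; `size_breakdown` is a hypothetical helper name, and the byte counts assume the document is UTF-8 encoded.

```python
import re

# Rough pattern for inline <script> and <style> elements. A regex is not a full
# HTML parser, so treat the results as estimates rather than exact figures.
INLINE_BLOCK = re.compile(
    r"<(script|style)\b[^>]*>(.*?)</\1\s*>", re.IGNORECASE | re.DOTALL
)


def size_breakdown(html: str) -> dict:
    """Report total UTF-8 bytes and the bytes spent inside inline script/style bodies."""
    total = len(html.encode("utf-8"))
    inline = {"script": 0, "style": 0}
    for match in INLINE_BLOCK.finditer(html):
        # group(1) is the tag name; group(2) is the element's inline body.
        # A <script src="..."></script> has an empty body and adds nothing here.
        inline[match.group(1).lower()] += len(match.group(2).encode("utf-8"))
    inline_total = inline["script"] + inline["style"]
    return {
        "total_bytes": total,
        "inline_script_bytes": inline["script"],
        "inline_style_bytes": inline["style"],
        "inline_share": inline_total / total if total else 0.0,
    }
```

A high `inline_share` suggests that large scripts and styles could move into external files, which Googlebot fetches as separate resources.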

Why File Size Limits Matter for Crawling

Crawling is the first stage of search engine indexing. If Googlebot cannot access or process all relevant content on a page due to file size restrictions, that content may not be evaluated for ranking purposes. This makes file size not just a performance issue, but an indexing concern.

Several scenarios illustrate why this matters:

- Critical content placed low in the HTML structure may be ignored if the size limit is reached before Googlebot gets to it.

- Large navigation menus or repeated template elements can consume a significant portion of crawlable bytes.

- Excessive inline structured data or embedded resources (such as base64-encoded images) can crowd out primary content.

In competitive industries, even small indexing inefficiencies can translate into lost visibility. When high-value keywords appear only in content beyond the processing threshold, search engines may not interpret the page’s relevance correctly.
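To check whether a specific phrase survives the cutoff, one can locate it in the encoded document. This Python sketch assumes the limit is counted in uncompressed UTF-8 bytes from the start of the file and that the phrase appears literally (not as HTML entities); `phrase_within_limit` and `visible_portion` are hypothetical helpers, and `limit_bytes` is deliberately a parameter so it can be set to whatever figure Google's current documentation states.

```python
def phrase_within_limit(html: str, phrase: str, limit_bytes: int) -> bool:
    """Return True if the first occurrence of `phrase` ends within the first `limit_bytes` bytes."""
    data = html.encode("utf-8")
    needle = phrase.encode("utf-8")
    start = data.find(needle)
    if start == -1:
        return False  # the phrase does not appear in the document at all
    return start + len(needle) <= limit_bytes


def visible_portion(html: str, limit_bytes: int) -> str:
    """Approximate the text a crawler would see if it stopped after `limit_bytes` bytes."""
    # errors="ignore" drops a multi-byte character that the cutoff splits in half.
    return html.encode("utf-8")[:limit_bytes].decode("utf-8", errors="ignore")
```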

The Impact on Site Indexing Practices

The clarification around file size limits reinforces the principle that content prioritization within HTML structure matters. Sites that traditionally rely on long-scroll designs, infinite loading features, or content-heavy pages may need to reassess their approach.

Key indexing implications include:

- Content Hierarchy Becomes Critical: Important headings, primary copy, and structured data should appear early in the HTML.

- Template Efficiency Gains Importance: Reducing unnecessary navigation duplication and excessive footer content can preserve valuable crawlable space.

- Rendering Considerations Increase: JavaScript-heavy pages that generate large rendered DOM files could unintentionally push key content beyond the effective processing limit.

This is especially significant for enterprise websites, e-commerce platforms with extensive filtering systems, and large publishers with embedded media and dynamic content modules.
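One rough way to quantify template overhead is to measure how much of the document sits inside navigation, header, footer, and sidebar elements. The Python sketch below assumes the site marks up its template with semantic `<nav>`, `<header>`, `<footer>`, and `<aside>` elements; `template_share` is a hypothetical helper, and the regex only approximates nested markup.

```python
import re

# Outermost template regions. Non-greedy matching stops at the first matching
# closing tag, so nested elements of the same type are only approximated.
TEMPLATE_REGION = re.compile(
    r"<(nav|header|footer|aside)\b.*?</\1\s*>", re.IGNORECASE | re.DOTALL
)


def template_share(html: str) -> float:
    """Fraction of the document's UTF-8 bytes that fall inside template regions."""
    total = len(html.encode("utf-8"))
    if total == 0:
        return 0.0
    template = sum(
        len(m.group(0).encode("utf-8")) for m in TEMPLATE_REGION.finditer(html)
    )
    return template / total
```

A share that keeps climbing as navigation and footer links are added signals that boilerplate is competing with primary content for the crawlable portion of the file.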

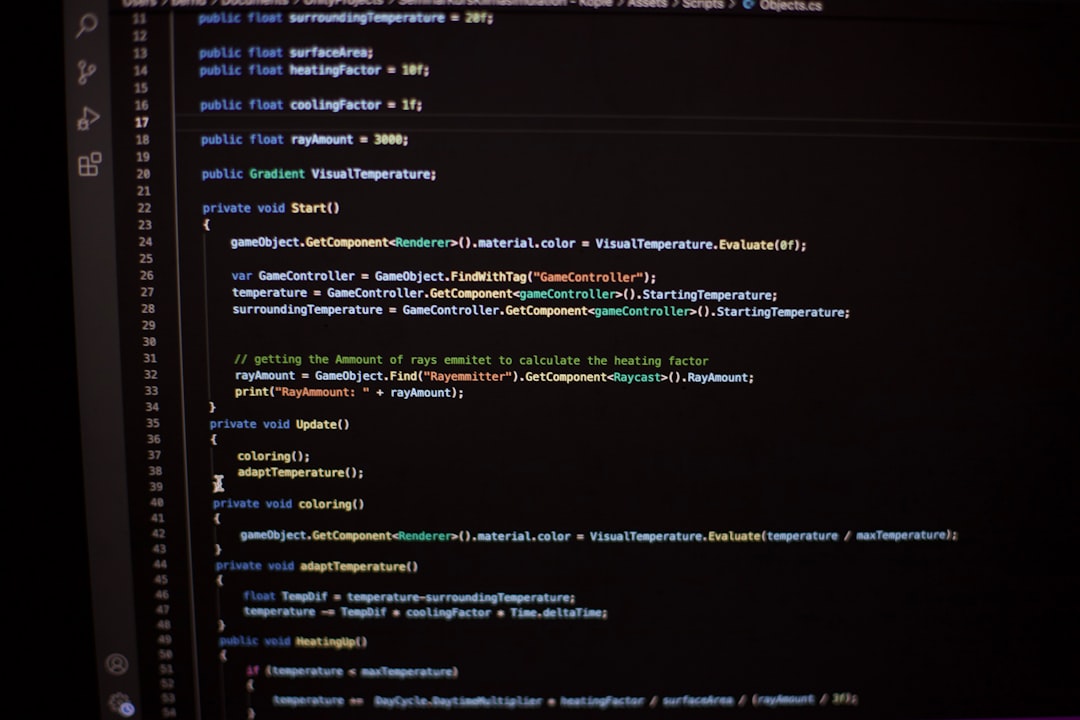

JavaScript Rendering and the File Size Question

Modern websites frequently rely on client-side rendering. When Googlebot renders JavaScript, it effectively processes the generated HTML output. If that rendered output becomes excessively large, the effective crawlable cap could still apply.

Developers must therefore think beyond raw HTML file size. They should evaluate:

- The size of the fully rendered DOM.

- How dynamically injected modules contribute to total page weight.

- Whether lazy-loaded content is discoverable via proper linking structures.
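The first two checks in the list above can be approximated by comparing the HTML the server sends with the HTML that exists after rendering. This Python sketch assumes both versions have already been saved as strings (for example, the raw response and the rendered HTML copied from a browser's developer tools). It counts start tags as a crude proxy for DOM elements, and the helper names are hypothetical.

```python
from html.parser import HTMLParser


class _TagCounter(HTMLParser):
    """Counts start tags as a rough proxy for the number of DOM elements."""

    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        self.count += 1


def _profile(html: str) -> tuple[int, int]:
    """Return (UTF-8 byte size, start-tag count) for an HTML string."""
    counter = _TagCounter()
    counter.feed(html)
    counter.close()
    return len(html.encode("utf-8")), counter.count


def compare_raw_and_rendered(raw_html: str, rendered_html: str) -> dict:
    """Show how much client-side rendering adds to size and element count."""
    raw_bytes, raw_tags = _profile(raw_html)
    rendered_bytes, rendered_tags = _profile(rendered_html)
    return {
        "raw_bytes": raw_bytes,
        "rendered_bytes": rendered_bytes,
        "added_bytes": rendered_bytes - raw_bytes,
        "raw_tags": raw_tags,
        "rendered_tags": rendered_tags,
    }
```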

While lazy loading improves user experience and performance metrics, improper implementation, such as loading content only after a user scrolls or clicks, can prevent search engines from encountering secondary content entirely.
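Google's documentation notes that it can generally follow links only when they are `<a>` elements with an `href` attribute, so a "load more" control driven purely by a click handler offers no crawlable path to the content behind it. The Python sketch below flags anchors without a usable `href`; `audit_links` is a hypothetical helper, and treating empty, fragment-only, and `javascript:` values as non-crawlable is a simplifying assumption.

```python
from html.parser import HTMLParser


class _LinkAudit(HTMLParser):
    """Collects <a href> targets and counts anchors without a usable href."""

    def __init__(self) -> None:
        super().__init__()
        self.crawlable: list[str] = []
        self.uncrawlable = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":  # HTMLParser lowercases tag names
            return
        href = (dict(attrs).get("href") or "").strip()
        # Assumption: empty, fragment-only, and javascript: hrefs lead nowhere new.
        if not href or href.startswith("#") or href.lower().startswith("javascript:"):
            self.uncrawlable += 1
        else:
            self.crawlable.append(href)


def audit_links(html: str) -> tuple[list[str], int]:
    """Return (crawlable hrefs, count of anchors without a crawlable href)."""
    parser = _LinkAudit()
    parser.feed(html)
    parser.close()
    return parser.crawlable, parser.uncrawlable
```

Controls built from `<button>` elements are not counted at all, since they are not links in the first place; pairing such a control with a plain `<a href>` to a paginated URL keeps the content discoverable.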

Performance Optimization and SEO Alignment

The documentation update bridges technical SEO and web performance disciplines. Reducing file size aligns with:

- Core Web Vitals optimization

- More efficient crawling

- Improved server resource management

Websites operating at scale must consider crawl budget allocation. When pages are excessively large, Googlebot may spend more time processing fewer URLs. Streamlined page construction supports broader and more efficient site crawling.

Best practices include:

- Minifying HTML, CSS, and JavaScript.

- Removing unused scripts and deprecated code.

- Limiting inline CSS where possible.

- Reducing repetitive boilerplate elements.

- Paginating extremely long content sections.
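As an illustration of the first practice, the Python sketch below performs a deliberately naive form of HTML minification: it strips comments and collapses whitespace runs between tags to a single space. It is not a substitute for a production minifier (for instance, it would also strip comment-like text inside inline scripts); `naive_minify` is a hypothetical helper, and it leaves whitespace untouched whenever a page contains `<pre>` or `<textarea>`, where spacing is significant.

```python
import re

COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)
INTER_TAG_WHITESPACE = re.compile(r">\s+<")


def naive_minify(html: str) -> str:
    """Remove HTML comments and collapse whitespace runs between tags."""
    out = COMMENT.sub("", html)
    lowered = out.lower()
    if "<pre" in lowered or "<textarea" in lowered:
        # Whitespace is significant in these elements, so stop after comments.
        return out
    # Collapse to one space rather than none, so adjacent inline elements keep their gap.
    return INTER_TAG_WHITESPACE.sub("> <", out)
```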

Content Placement Strategy in Light of the Limit

The structural layout of content becomes strategically important. Developers and SEO specialists should collaborate to ensure that essential information appears prominently in the HTML sequence.

Recommended placement priorities:

- Primary H1 heading near the top of the document.

- Core topic introduction early in the body.

- Critical internal links inserted before large decorative sections.

- Structured data embedded efficiently and concisely.

This does not mean reducing the depth of content. Rather, it means structuring expansive resources so that the most important signals are encountered first within the crawlable portion of the file.
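For the last placement priority listed above, structured data can be kept concise by serializing JSON-LD without pretty-printing. This Python sketch uses only the standard `json` module; `compact_json_ld` and `json_ld_script` are hypothetical helpers, and any schema.org values passed to them are up to the site.

```python
import json


def compact_json_ld(data: dict) -> str:
    """Serialize JSON-LD with no indentation and no spaces after separators."""
    # ensure_ascii=False keeps non-ASCII text as raw UTF-8 instead of \uXXXX escapes.
    text = json.dumps(data, separators=(",", ":"), ensure_ascii=False)
    # Escape "</" so a string value such as "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape that parsers read back as "/".
    return text.replace("</", "<\\/")


def json_ld_script(data: dict) -> str:
    """Wrap compact JSON-LD in the script element used to embed it in HTML."""
    return f'<script type="application/ld+json">{compact_json_ld(data)}</script>'
```

For a small object the savings are modest, but on templates that embed large product or breadcrumb markup on every page, dropping indentation adds up.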

E-Commerce and Large Publisher Considerations

E-commerce platforms face special challenges. Category pages often include dynamic filters, product grids, user reviews, FAQs, and recommendation modules. If poorly optimized, these elements can dramatically inflate file size.

For publishers, long-form investigative pieces with embedded multimedia and advertising scripts may approach processing thresholds. Solutions may include:

- Modular loading of secondary components.

- Reducing DOM depth.

- Ensuring advertisements load asynchronously.

- Breaking ultra-long articles into structured multi-page formats when appropriate.

These adjustments help preserve indexability while maintaining strong user engagement.
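The last solution listed above, splitting ultra-long articles, can be approached by grouping paragraphs into pages under a byte budget. In this Python sketch, `paginate` and `budget_bytes` are hypothetical; the budget covers article body text only, so the template markup on each page would need its own allowance.

```python
def paginate(paragraphs: list[str], budget_bytes: int) -> list[list[str]]:
    """Greedily group paragraphs into pages whose UTF-8 size stays within budget.

    A single paragraph larger than the budget gets a page of its own rather
    than being split mid-paragraph.
    """
    pages: list[list[str]] = []
    current: list[str] = []
    used = 0
    for para in paragraphs:
        size = len(para.encode("utf-8"))
        # Start a new page when adding this paragraph would exceed the budget.
        if current and used + size > budget_bytes:
            pages.append(current)
            current, used = [], 0
        current.append(para)
        used += size
    if current:
        pages.append(current)
    return pages
```

Greedy grouping keeps paragraphs in their original order and never splits one, which matters more for readability than squeezing every page to exactly the budget.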

What This Documentation Update Really Signals

While the file size limit itself may not be entirely new, Google’s decision to clarify it signals an increased emphasis on efficient crawling and clean architecture. As web experiences become more complex, search engines must maintain practical limitations to manage resources at scale.

For site owners, the takeaway is not to panic about minor size discrepancies. Instead, the focus should shift toward proactive auditing and alignment between development and SEO teams.

Helpful auditing steps include:

- Regularly reviewing rendered HTML size.

- Testing pages with the URL Inspection tool in Google Search Console.

- Monitoring the Crawl Stats report.

- Conducting periodic technical SEO audits.

By incorporating these practices, websites can remain resilient regardless of evolving documentation refinements.
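The first auditing step can be partly automated with a small script that flags pages approaching a size threshold. This Python sketch rests on assumptions: `fetch_html`, `classify`, and `audit` are hypothetical helpers, the 80% warning ratio is arbitrary, and `limit_bytes` should be set from Google's current documentation. It measures the raw server response rather than rendered HTML; because `urllib` sends no `Accept-Encoding` header by default, servers normally return an uncompressed body.

```python
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> bytes:
    """Download a page's raw HTML (normally uncompressed, see lead-in)."""
    request = Request(url, headers={"User-Agent": "size-audit-sketch/0.1"})
    with urlopen(request, timeout=timeout) as response:
        return response.read()


def classify(size: int, limit_bytes: int, warn_ratio: float = 0.8) -> str:
    """Label a page 'over', 'warn' (at or above warn_ratio of the limit), or 'ok'."""
    if size > limit_bytes:
        return "over"
    if size >= warn_ratio * limit_bytes:
        return "warn"
    return "ok"


def audit(pages: dict[str, bytes], limit_bytes: int, warn_ratio: float = 0.8) -> dict[str, str]:
    """Classify each URL's HTML body by its size in bytes."""
    return {url: classify(len(body), limit_bytes, warn_ratio) for url, body in pages.items()}
```

In practice, `pages` could be built as `{url: fetch_html(url) for url in urls}` over a list of representative templates (home, category, product, article), with flagged URLs then checked in URL Inspection to see what Google actually rendered.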

Conclusion

The updated Googlebot file size limit documentation reinforces a fundamental truth: clean, structured, and efficient code supports better indexing. Although most well-optimized websites may not experience sudden drops due to this clarification, those operating with bloated templates or excessive rendered DOM sizes should take notice.

Ultimately, the change encourages a healthier web ecosystem. When developers prioritize streamlined architecture and content hierarchy, both users and search engines benefit. As crawling technologies evolve, clarity on processing limits provides valuable guardrails for sustainable growth in search visibility.

Frequently Asked Questions (FAQ)

1. What is Googlebot’s file size limit?

Googlebot processes only a certain amount of data per file. Once the limit is reached, any additional content beyond that threshold may not be processed or indexed.

2. Does exceeding the file size limit prevent a page from being indexed?

No. The page can still be indexed, but content beyond the limit may not be evaluated, potentially affecting rankings for keywords located in that portion.

3. Does the limit apply to rendered JavaScript pages?

Yes. The effective size of the rendered HTML after JavaScript execution can influence how much content Googlebot processes.

4. How can site owners check their page size?

They can inspect rendered HTML through browser developer tools, use SEO auditing tools, and review page responses via Google Search Console’s URL Inspection tool.

5. Is this update a penalty?

No. It is a documentation clarification, not a new penalty. However, it reinforces existing technical best practices.

6. What types of sites are most affected?

Large e-commerce platforms, media publishers, and JavaScript-heavy web applications are more likely to encounter issues if pages are not carefully optimized.

7. What is the best long-term solution?

Maintain lean, efficient code; prioritize essential content placement; and conduct regular technical audits to ensure crawlability aligns with Google’s processing behavior.