The newest iteration of Apple’s flagship smartphone, the iPhone 16, is here—and it’s smarter than ever. With the spotlight on Visual Intelligence, Apple is once again redefining how users interact with their smartphones using enhanced computational photography, real-time object recognition, and advanced scene understanding. These features aren’t just cool—they’re incredibly useful, making everyday tasks quicker, easier, and far more intuitive.

What is Visual Intelligence?

Visual Intelligence (VI) refers to a smartphone’s ability to understand, interpret, and respond to its visual environment using machine learning and AI-driven algorithms. On the iPhone 16, Apple has expanded these capabilities with its new A18 chip and upgraded Neural Engine, allowing the device to process complex visual data in real time. The result is smarter photography, better augmented reality, and a deeper understanding of your surroundings.

Top Visual Intelligence Features of the iPhone 16

Here’s a rundown of the standout VI tools and enhancements that Apple brings with this model:

- Live Object Recognition: The iPhone 16 can now recognize over 500 types of objects and scenes in real-time, from exotic pets to obscure mechanical tools. Simply point your camera, and it provides instant information or suggested actions.

- Smart Capture: With intelligent framing, enhanced auto-focus, and real-time lighting corrections, Smart Capture ensures every photo is optimized before you even press the shutter button. It’s like having a professional photographer baked into your phone.

- Visual Look Up Expanded: First introduced with iOS 15, Visual Look Up has been given a serious upgrade. You can now tap on items in your photos for instant contextual searches, such as identifying a landmark in the background of your selfie or getting shopping links for clothes seen in a picture.

- Scene Context Actions: The new VI system recognizes the context of what’s happening in your photos or camera view and suggests helpful actions, like calling a business if a storefront is detected, or translating menus in real time when abroad.

- Personalized Visual Experiences: Apple has implemented on-device learning that tailors results based on your interests and usage patterns, leading to faster and more relevant recommendations and actions.
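To make the idea behind Scene Context Actions more concrete, here is a minimal Python sketch of one way a system could turn recognized objects into suggested actions. Apple has not published how its own implementation works; the `Detection` class, the `ACTIONS` table, its labels, and the 0.6 confidence threshold are all hypothetical, and the detections are assumed to come from some upstream recognition model.

```python
from dataclasses import dataclass


# Hypothetical output of a recognition model: a label plus a confidence in [0, 1].
@dataclass
class Detection:
    label: str
    confidence: float


# Hypothetical mapping from recognized scene labels to suggested actions.
ACTIONS = {
    "storefront": "Call business",
    "menu": "Translate text",
    "landmark": "Look up landmark",
}


def suggest_actions(detections, threshold=0.6):
    """Return suggested actions for confident detections, most confident first."""
    # Keep only detections we are confident about and know how to act on.
    confident = [
        d for d in detections if d.confidence >= threshold and d.label in ACTIONS
    ]
    confident.sort(key=lambda d: d.confidence, reverse=True)

    suggestions = []
    for d in confident:
        action = ACTIONS[d.label]
        if action not in suggestions:  # avoid suggesting the same action twice
            suggestions.append(action)
    return suggestions
```

For example, `suggest_actions([Detection("menu", 0.9), Detection("storefront", 0.7), Detection("landmark", 0.3)])` returns `["Translate text", "Call business"]`: the low-confidence landmark is ignored, and the most confident suggestion comes first.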

How Does It Work?

Thanks to the A18 chip and its 16-core Neural Engine, the iPhone 16 can perform trillions of operations per second. That processing power is used not just for speed but for precision. The phone fuses data from the camera, accelerometer, gyroscope, and even its microphones to build a coherent picture of its environment.
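Apple doesn’t document exactly how the iPhone 16 combines its sensors, but a classic and much simpler example of sensor fusion is the complementary filter, which blends a gyroscope (smooth but prone to drift) with an accelerometer (noisy but drift-free) to estimate how the phone is tilted. The Python sketch below illustrates that general technique under simplifying assumptions (a single pitch angle, a fixed sample interval `dt`, gyroscope readings in rad/s, accelerometer readings in m/s²). It is not Apple’s implementation, and the function names are hypothetical.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle (radians) implied by gravity as measured by the accelerometer."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyroscope rate and accelerometer tilt into one pitch estimate per sample.

    samples: iterable of (gyro_rate, ax, ay, az) tuples, gyro_rate in rad/s.
    alpha:   weight on the integrated gyroscope (smooth, but drifts over time);
             1 - alpha weights the accelerometer (noisy, but has no drift).
    """
    pitch = None
    history = []
    for gyro_rate, ax, ay, az in samples:
        measured = accel_pitch(ax, ay, az)
        if pitch is None:
            # Start from the accelerometer's absolute tilt reading.
            pitch = measured
        else:
            # Integrate the gyroscope, then nudge toward the accelerometer.
            pitch = alpha * (pitch + gyro_rate * dt) + (1 - alpha) * measured
        history.append(pitch)
    return history
```

With the phone lying flat, a constant gyroscope bias of 0.01 rad/s, and `dt = 0.01` s, integrating the gyroscope alone would drift to about 0.2 rad after 2,000 samples, while the filtered estimate levels off near 0.0049 rad (`alpha * bias * dt / (1 - alpha)`). The accelerometer term keeps the error bounded.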

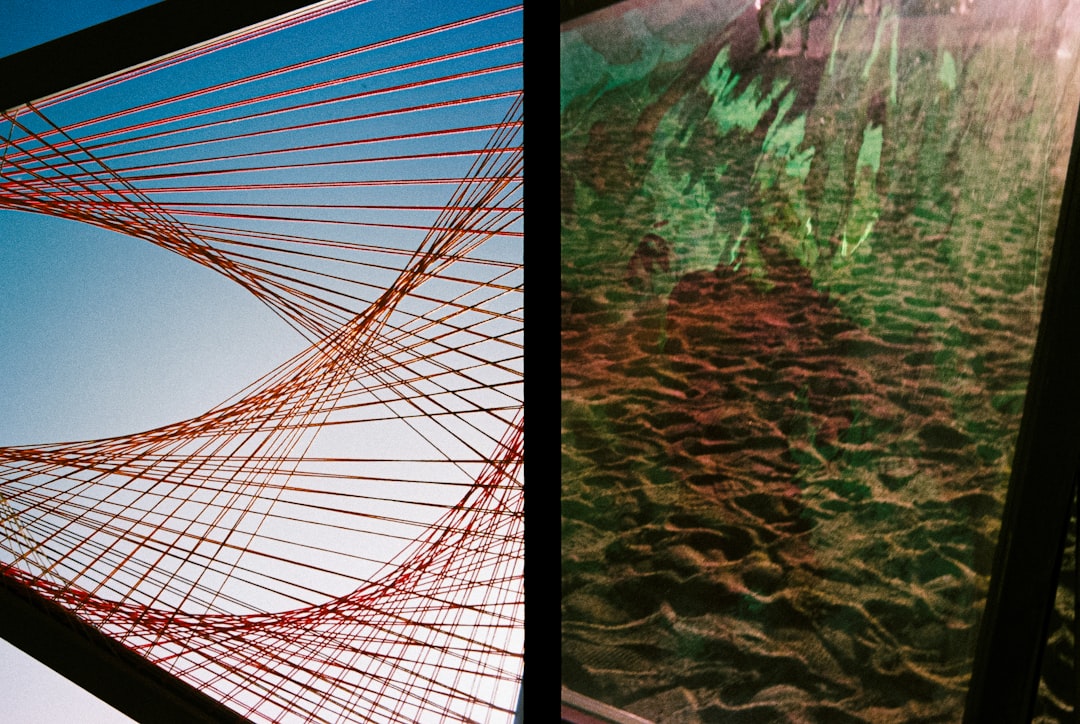

For instance, when you point the camera at a work of art, the VI system analyzes its shape, color palette, and style in milliseconds to match it against museum databases or online image repositories.
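The matching step can be pictured as a nearest-neighbor search: turn the image into a numeric feature vector, then find the catalog entry whose vector points in the most similar direction. The Python sketch below assumes such vectors already exist; how Apple actually extracts features and which databases it queries are not described in this article. The `CATALOG` entries, their three-number vectors, and the 0.8 cutoff are made up for illustration.

```python
import math

# Hypothetical catalog: artwork identifiers mapped to made-up feature vectors.
CATALOG = {
    "painting_a": [0.9, 0.1, 0.3],
    "painting_b": [0.1, 0.8, 0.5],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def best_match(query, catalog, min_score=0.8):
    """Return (name, score) for the most similar entry, or None if none is close enough."""
    best_name, best_score = None, -1.0
    for name, features in catalog.items():
        score = cosine_similarity(query, features)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < min_score:
        return None  # better to report "no match" than a weak guess
    return best_name, best_score
```

Here `best_match([0.85, 0.15, 0.35], CATALOG)` returns `"painting_a"` paired with its similarity score, because the query points in nearly the same direction as that entry’s vector. A query with no close match returns `None`.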

Real-World Applications

Visual Intelligence isn’t just about upgrading the camera—it has practical implications across multiple use cases:

- Shopping: See something you like? Point your iPhone 16 at it and instantly see where to buy it, compare prices, or find similar items.

- Translation: Traveling abroad? Real-time translation of road signs, menus, and instructions happens fluidly as you move your camera over text.

- Education: Your child found a bug in the backyard and wants to know what it is? The iPhone 16 could recognize the species and instantly display a fun fact file about it.

- Accessibility: The device can describe visual cues aloud for users with visual impairments, making it a powerful assistive technology tool.

Privacy and On-Device Intelligence

One of the most impressive aspects of Apple’s VI system is its commitment to privacy. Almost all processing is handled on-device, so your photos and data stay private and aren’t sent to external servers unless you choose to share them. Keeping the work on-device also reduces latency, because nothing has to make a round trip to a server before you get a result.

What’s Next?

Apple has laid a strong foundation for the future of Visual Intelligence. Developers are already building on Apple’s machine-learning frameworks, such as Core ML and Vision, to bring similar visual capabilities into apps for health, fitness, education, and home automation. We’re just beginning to see the opportunities that arise when your phone understands the world as well as you do.

The iPhone 16 is more than just a high-end smartphone—it’s a lens through which the digital and real worlds blend more seamlessly than ever before. With visual intelligence leading the way, the boundaries of what your phone can “see” and “understand” are truly being redefined.